Artificial intelligence in media and entertainment has a vast range of uses that can enhance content creation, content management, and user experience beyond what was possible only a few years ago. That power also raises ethical questions about how it is implemented. At this year’s Entertainment Evolution Symposium, organized by MESA, FPT Software’s Chief Artificial Intelligence Officer, Phong Nguyen, and Managing Director of Communications, Media & Entertainment, Ira Dworkin, discussed the potential applications of AI in media and the associated risks.

How does AI relate to media and entertainment?

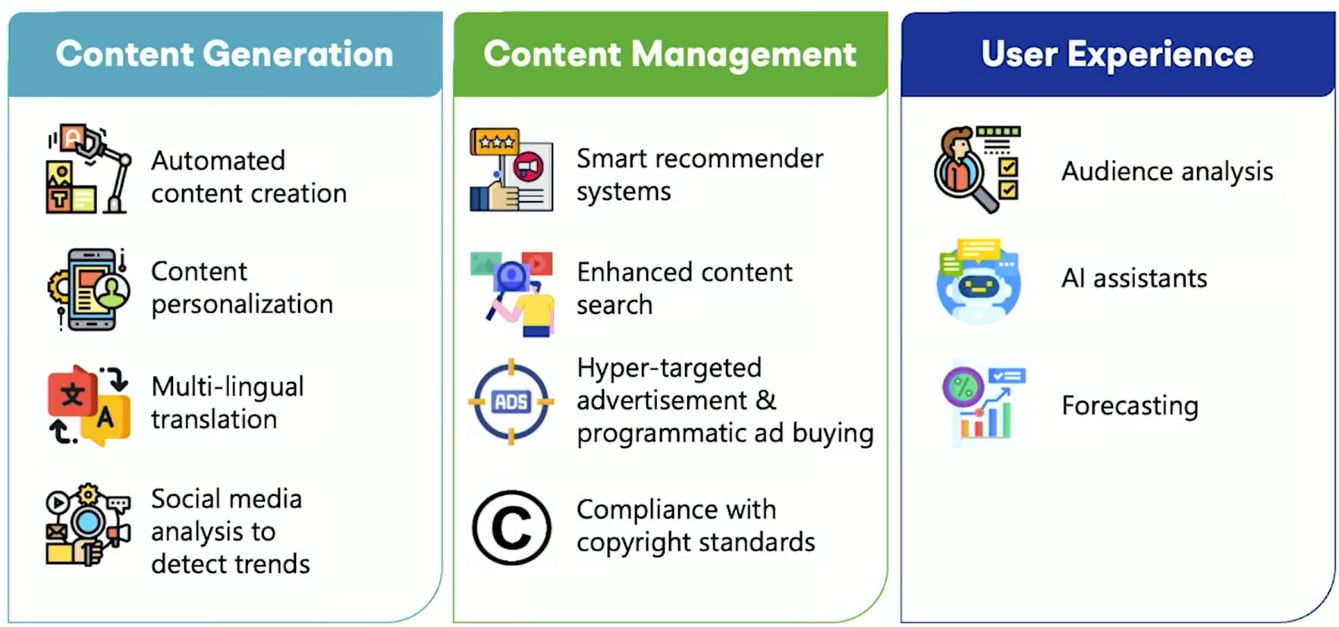

AI in the media and entertainment space can be broadly segmented into three basic categories: content generation, content management, and user experience. Each represents a part of the lifecycle of a given asset and what it provides to both the creator and the user. In content generation, AI allows for greater productivity when creating or preparing assets for distribution. Automation can accelerate the encoding process, create content tags automatically, and shorten the previously time-consuming translation and subtitling processes.

Content management systems allow organizations to manage the distribution, rights, and metadata associated with assets. AI systems can leverage metadata and usage information to recommend specific content to highly targeted audiences. They can also make richer metadata practical, so content distributors can build more compelling systems that let viewers surface relevant content more rapidly. One of the most important areas where AI can contribute is compliance. Because standards vary globally, managing compliance for a content asset distributed across a wide geography can be taxing and time-consuming without AI. Automation tools can help creators make appropriate content for any given market by identifying, and even filtering out, scenes with explicit content that may not be permitted in certain markets.
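As a rough illustration of the compliance use case, the sketch below splits an asset's scenes into those that can be shown in a given market and those that need review. It assumes an upstream model has already tagged each scene; the market names, tag names, `Scene` class, and `split_for_market` function are all hypothetical and were not presented in the session.

```python
from dataclasses import dataclass, field

# Hypothetical per-market rules: content tags that may not be distributed there.
MARKET_RESTRICTIONS = {
    "market_a": {"explicit", "graphic_violence"},
    "market_b": {"explicit"},
    "market_c": set(),
}


@dataclass
class Scene:
    scene_id: str
    start_s: float
    end_s: float
    # Tags are assumed to come from an upstream content classifier.
    tags: set = field(default_factory=set)


def split_for_market(scenes, market):
    """Return (allowed, flagged) scene lists for one market."""
    restricted = MARKET_RESTRICTIONS[market]
    allowed, flagged = [], []
    for scene in scenes:
        # A scene is flagged if it carries any tag restricted in this market.
        if scene.tags & restricted:
            flagged.append(scene)
        else:
            allowed.append(scene)
    return allowed, flagged


scenes = [
    Scene("s1", 0.0, 42.5, {"dialogue"}),
    Scene("s2", 42.5, 90.0, {"graphic_violence"}),
    Scene("s3", 90.0, 130.0, {"explicit"}),
]

allowed, flagged = split_for_market(scenes, "market_b")
print([s.scene_id for s in allowed], [s.scene_id for s in flagged])
```

Keeping the rules in a plain lookup table, separate from the classifier, means a change in one market's standards only requires editing the table rather than retraining a model.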

Finally, AI can help to improve the overall user experience. AI models can help ensure that relevant content is being provided to users. Additionally, AI assistants such as chatbots offer a cost-effective way to resolve customer issues quickly, increasing overall satisfaction with a service. A detailed understanding of end-user preferences and usage facilitates more accurate forecasting and production planning for content producers, as well as more targeted messaging for advertisers.

The Good, The Bad, and The Ugly

As noted above, there is a wide range of positive use cases for AI in Media & Entertainment. Other examples were provided in the MESA session, including public service campaigns in which AI was used to automatically translate a message from a celebrity into a range of languages. Tailoring content to a large demographic, or even creating new content based on what humans have made, are also key examples. The intersection between human creations and AI-made developments sets a foundation that outlines the trajectory of content creation. During the session, Nguyen and Dworkin showed a video of a song composed with AI in the style of The Beatles. The human element (The Beatles’ discography) set the foundation for the AI’s creation, “Daddy’s Car”. While these are positive applications, it’s easy to see how the technology can be used in a similar fashion but with negative consequences, which raises ethical considerations.

To further illustrate the issues that AI misuse can cause, the speakers gave examples of recent cases involving malicious misinformation created solely to deceive and confuse audiences. Misuse can include censoring opposing views or statements to gain a competitive advantage, using AI to rewrite history (portraying previously well-documented events differently), or leveraging AI-generated videos and images that replicate the likeness and voice of a celebrity or politician (referred to as “deepfakes”) to deliver messages that the real person would typically not deliver. The danger associated with using AI to create false narratives or manipulate information must be addressed.

A less obvious ethical risk of AI is unintentional but real human bias: the biases of the people who build a model, or of the data they choose, may be built into the model without anyone intending it. “Machine learning models are only as good as the data used to train them,” Nguyen said. Although AI is capable of expanding upon human functions, ideas, and goals, the foundation on which it begins must be built with awareness of these ethical risks.
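One simple way to surface this kind of bias is to compare how often a model's output favors each audience group. The sketch below computes a per-group selection rate and the gap between the highest and lowest rates (often called a demographic parity difference). It is an illustration only, not a method described in the session; the group labels, sample records, and function names are hypothetical.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, selected) pairs. Returns {group: rate}."""
    counts = defaultdict(lambda: [0, 0])  # group -> [selected count, total count]
    for group, selected in records:
        counts[group][0] += int(selected)
        counts[group][1] += 1
    return {group: sel / total for group, (sel, total) in counts.items()}


def parity_gap(records):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical log: whether a recommender surfaced a title to users in each group.
records = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

print(selection_rates(records))
print(parity_gap(records))
```

A large gap does not prove the model is unfair, but it is a signal that the training data or the model deserves a closer look.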

Risk Mitigation

To create positive AI, Nguyen outlined three approaches to the potential ethical pitfalls described above:

- Technology Approach: Build a model with robustness, clarity of goal, and fairness.

- Operational Approach: Create a safeguard function that checks the performance of the AI model, similar to QA in software.

- Philosophical Approach: Align the goals of AI with those of mankind.

Applying these approaches, with a model that fits the organization’s needs, is what produces the desired outcomes when implementing automation. Simply understanding the potential ethical risks of leveraging AI and machine learning can help companies take proactive steps to avoid them.
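The operational approach, a safeguard that checks model performance much as QA checks software, could look something like the following minimal sketch. It scores a model against a held-out labeled set and blocks release when accuracy falls below a threshold. The `toy_model` stand-in, the `explicit_score` feature, the sample data, and the 0.9 threshold are all assumptions made for illustration.

```python
def accuracy(predict, examples):
    """Fraction of (features, label) examples the model predicts correctly."""
    if not examples:
        raise ValueError("need at least one labeled example")
    correct = sum(1 for features, label in examples if predict(features) == label)
    return correct / len(examples)


def safeguard_check(predict, examples, min_accuracy=0.9):
    """Return (passed, score); passed is True only if score meets the threshold."""
    score = accuracy(predict, examples)
    return score >= min_accuracy, score


# Hypothetical stand-in for a real content-moderation model.
def toy_model(features):
    return "flag" if features["explicit_score"] > 0.5 else "ok"


# Hypothetical held-out examples with human-reviewed labels.
holdout = [
    ({"explicit_score": 0.9}, "flag"),
    ({"explicit_score": 0.1}, "ok"),
    ({"explicit_score": 0.7}, "flag"),
    ({"explicit_score": 0.6}, "ok"),
]

passed, score = safeguard_check(toy_model, holdout)
print(passed, score)
```

Running a check like this on every model update, in the same way a test suite runs on every software build, gives the organization a repeatable gate rather than a one-time review.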

Additionally, thought should be given as to whether to use a pre-built model (such as those offered by the major cloud providers), build a fully custom ML model, or use some combination of the two. The appeal of pre-built models is obvious: get to market faster with less software development investment. However, pre-built models may limit fine-tuning and may not fully address the organization’s business needs; in these cases, a hybrid approach, leveraging a pre-built model as a base and then extending it with customizations, could make the most sense. As Nguyen described them, a pre-built model tends to function more like a fast-food meal, whereas a custom model can be compared to a home-cooked meal. While a pre-built model will ultimately be cheaper and quicker, a custom model provides control and accuracy at the cost of time and money. For these reasons, hybrid models tend to be a common go-to among organizations.

Organizational Considerations

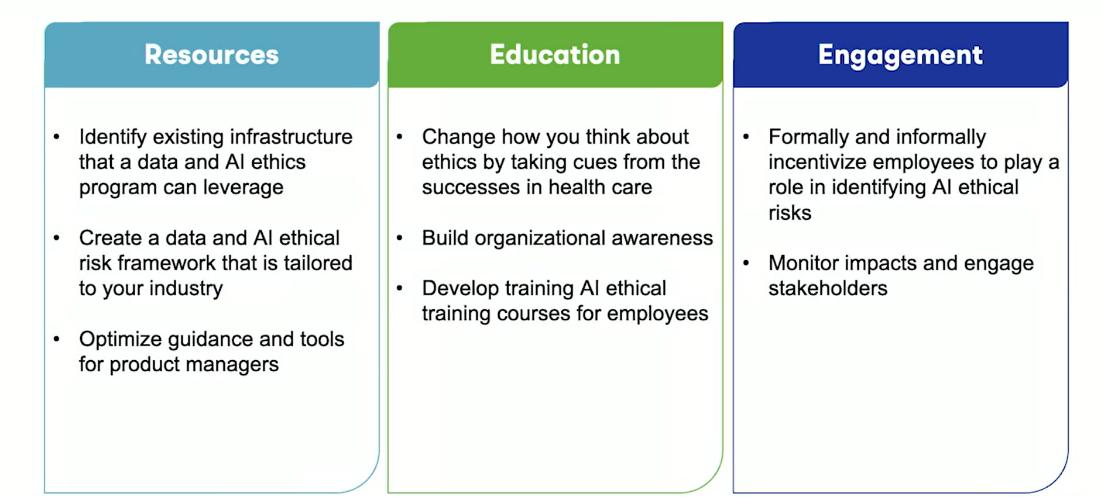

Resources, education, and engagement are the three parts of ethics to focus on within an organization when approaching AI. Identifying resources prepares the organization to put infrastructure in place, create a framework, and optimize guidance. Education develops the overall organizational understanding of AI ethics and of how to meet business needs while minimizing ethical risk. Finally, engagement is the active implementation of ethical AI processes.

The power of AI should not be overlooked, and handling it well takes a careful combination of preparation, attention, and education. Creating an organizational culture and awareness around ethical considerations will not only prevent public relations disasters but also ensure that customers can develop trust in your organization while getting the best that artificial intelligence has to offer.