Generative AI represents a new frontier in artificial intelligence (AI). Unlike traditional AI, which focuses on extracting answers from existing data, Generative AI can create new content such as images, text, 3D graphics, and even videos. However, without proper risk management, threat actors can potentially exploit vulnerabilities from Generative AI within organizations.

Understanding different generative AI security challenges

1. Data breach due to human errors

One of the main reasons for a security breach is due to human errors. According to a study by IBM, human error is the main cause of 95% of cybersecurity breaches. In the context of security, human errors are unintentional actions - or lack of action - by users that lead to, spread, or allow a security breach to happen. With the increasing use of ChatGPT, employees may disclose sensitive work information, such as internal source code, copyrighted materials, trade secrets, personally identifiable information (PII), and confidential business information. Despite the potential threats, only 38% of companies currently using Generative AI have measures in place to mitigate cybersecurity risks.

One of the incidents involved a data leak from Samsung, where the company’s employees accidentally disclosed confidential information while utilizing ChatGPT for assistance within the workplace. Notably, an employee inserted confidential source code into ChatGPT while seeking error validation, and another employee shared a meeting recording to convert it into presentation notes. In response, Samsung has promptly taken measures to address the situation. This includes restricting the upload capacity to 1024 bytes per individual on ChatGPT and investigating the employees involved in the data breach. Additionally, Samsung is considering the development of an in-house AI chatbot to mitigate the likelihood of future breaches.

2. Security vulnerability within generative AI tools

AI-generated tools themselves can contain vulnerabilities that expose companies to cybersecurity attacks. Generative AI, such as ChatGPT, is more vulnerable because of the complexity of its models. Generative AI models are often intricate, making them challenging to be fully secure. The complexity introduces a larger attack surface, which increases the likelihood of undiscovered vulnerabilities that security perpetrators can exploit. According to research, 46% of companies believe that generative AI could heighten their organization's susceptibility to attacks.

Besides, while generative AI tools can generate responses that appear human-like, they may lack a deep understanding of context. Attackers could exploit this limitation by crafting inputs designed to confuse or mislead the AI, potentially leading to undesirable outcomes. Additionally, Generative AI models often lack explainability, making it difficult to understand how they generate responses or identify potential vulnerabilities. This challenge can hinder business efforts to detect and mitigate security risks effectively.

3. Data poisoning – The poison to cybersecurity

Data poisoning refers to the malicious manipulation of training data used in developing AI models, aiming to influence the model's behavior negatively. During a data poisoning attack, hackers inject misleading or malicious information into the training dataset, causing the AI model to learn inaccurate patterns and make incorrect predictions.

There are 4 main types of data poisoning, including:

- Availability attack: In this type of attack, the entire model is corrupted, resulting in false positives, false negatives, and misclassified data samples

- Backdoor attack: During a backdoor attack, an actor introduces backdoors, such as a set of pixels in the corner of an image, into a set of training examples. This triggers the model to misclassify these examples and impact the quality of the output.

- Targeted attack: In targeted attacks, the model performs well for most samples, but few are compromised. This makes detection difficult due to the limited visible impact on the algorithm.

- Subpopulation attack: Subpopulation attacks, similar to targeted attacks, impact specific subsets of data. However, they influence multiple subsets with similar features while accuracy remains the same for the rest of the model.

Strategies for businesses to prevent Generative AI data threats

- Due to generative AI’s security vulnerabilities, safeguarding data becomes highly crucial. To tackle the challenges above, companies should:

- Establish clear policies regarding using generative AI tools and the consequences of breaching security protocols.

- Encrypt data before inputting it into Generative AI models and ensure that encrypted data is securely stored and transmitted.

- Provide regular training programs to educate employees about the importance of cybersecurity, the risks associated with data leaks, and best practices for handling sensitive information.

- Maintain open lines of communication between employees, managers, and IT security teams.

- Develop a comprehensive incident response plan that outlines the steps to be taken to minimize the consequences.

- Make sure that Generative AI models are updated with the latest security patches.

FPT Software – Your trusted partner for a secure AI-powered Data analytics platform

Power Insights – Maximizing data potential with AIFPT Software's PAS was established to provide modern tools and high-quality data analytics services based on the latest technology trends to help businesses maximize the potential of data for informed decision-making and drive development. Leveraging the robust platform of Microsoft's Azure OpenAI, Power Insights utilizes Generative AI based on FPT Software's data analysis and processing expertise to extract valuable insights from data through simple conversational language interactions instead of relying solely on analytical tools.

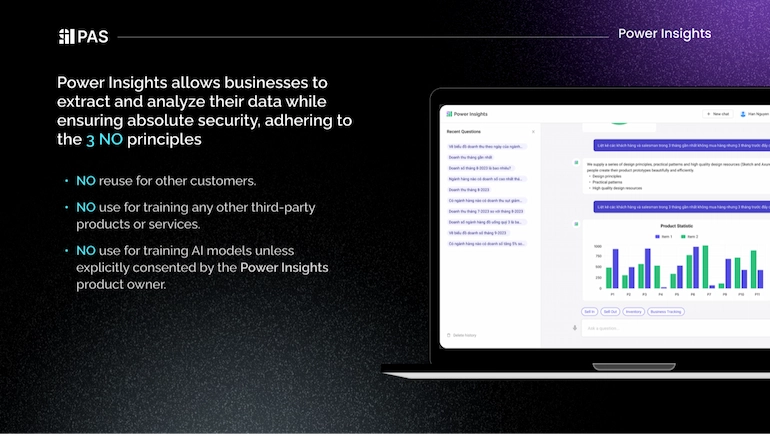

What makes Power Insights stand out?

Compliance with international privacy regulations

This application is designed to strictly adhere to all relevant data protection laws and regulations, such as GDPR and EU regulations. Information is always encrypted during transmission, and the data sources provided to Power Insights are prioritized and stored in customer data centers to ensure the security of the information.

Secure authentication

Power Insights uses oauth2 and Single Sign-On (SSO) security systems. OAuth 2.0 provides access authorization for applications to share resources without requiring login information authentication. Single Sign-On (SSO) allows users to log in once to access various application systems. SSO with a server-side module can identify accesses to applications as belonging to a user.

Ease of use for all business sizes

These methods allow small and medium-sized businesses or organizations to manage their login information more easily. This saves users time and avoids the hassle of remembering numerous login accounts for various applications. In particular, with the multi-tenancy management feature, customers can log in with multiple tenants on the same application.

The services and features of Power Insights are based on the data provided by businesses but still ensure absolute security following the principles of not reusing for other customers, not using for training other third-party products or services, and not using for training AI models without customer consent.

Securing the future of data with Power Insights

In conclusion, companies need to enhance data security to maximize the potential of Generative AI to gain a competitive edge, operational efficiency, and innovation. Therefore, businesses need a trusted partner on this journey to secure data.

Partner with PAS to create a safe and secure working environment for business in the new era of intuitive network infrastructure.

To understand more about the demo, don’t hesitate to contact us here: https://paschat.azurewebsites.net/login