Artificial Intelligence (AI) has thrived over the past few decades. Many tasks are now automated, or on their way to being automated, to make our lives easier and smoother. While computational power revolutionized machine learning, the heart of AI, data laid the foundation for that revolution. It is no exaggeration to say that data is the fundamental element that determines how effective any AI application can be. By applying suitable tools to analyse the data it generates, a company can obtain valuable information and better understand its own performance.

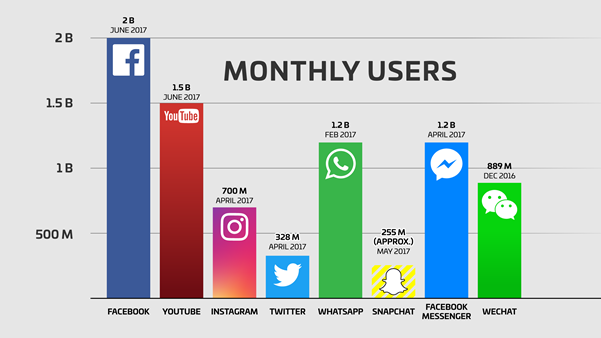

At the same time, as internet usage grows, users leave more and more traces of themselves across the web. Billions of people use social networks, many of them three or four at a time, and expose a growing amount of personal information. It is easier than ever to build a picture of a person from their browsing habits or search history. That is harmless as long as one’s personal data is kept secure, but once it is treated as a currency of the technology world, users’ privacy is at risk like never before.

Monthly users of social networks in 2018 [1]

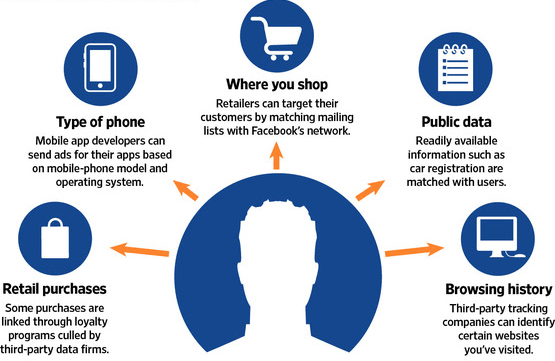

For marketing purposes, companies can track customers’ social accounts, search history and shopping habits to build profiles and sort them into targeted groups. The consequences range from something as mild as advertisements on the websites they frequent to something as devastating as identity theft. Unfortunately, one of the biggest social media platforms on Earth, Facebook, has allowed third-party applications that use Facebook Login to access its users’ data. Millions of users were unaware that their information was exposed until Facebook faced what can be called its biggest scandal, in 2018. Cambridge Analytica, a company working for Donald Trump’s election campaign, gained access to private information and analysed it in order to understand the personalities of American citizens and influence their behaviour. The idea was to target users based on their personality traits, which were inferred from their networks, favourite pages and activities. More than 50 million American users had their data exploited in a way that could be considered a privacy violation, and Facebook CEO Mark Zuckerberg was called to testify before Congress on multiple occasions about the company’s dealings with Cambridge Analytica.


What does Facebook know about you? Basically everything [2]

Now that AI far outperforms humans at handling large datasets, people, as users and customers, must be aware of how far the technology has advanced and protect their personal information for the sake of their own privacy. Tools can now infer age, location and personality from a public account, and some of that information is very sensitive, so people’s privacy is more exposed than ever before. What these applications cannot tell is whether or not they have crossed the line. They can only perform a given task as well as possible, without realizing the consequences.

One way to view this issue is that, unlike humans, machines do not have morality. Multiple studies have shown AI discriminating by age, gender, race, sexual orientation and more. Researchers have found that leading AI models are 1.5 times more likely to flag tweets written by African Americans as “offensive” [3]. Another study found apparent racial bias against Black speakers in five popular datasets used to study hate speech [4]. What machines can do is logically optimize for a given situation and act on the result.

The question now is whether or not we can teach machines morality. While we humans act on our feelings, machines learn from training data and cost-benefit calculations. To give machines a sense of morality, humans first have to understand it themselves and then translate it into a form that computers can process. That is easier said than done, because morality is so abstract that not everyone can define it, let alone define it precisely enough to be programmed into a computer. It is a demanding task, but not an impossible one.

We don’t know what the future holds or how far we can progress. What we do know is that, for something as beneficial and promising as Artificial Intelligence, we will put ever more effort into developing it, with the ultimate goal of enhancing our lives. It will have a positive impact if we know how to make use of it as well as how to manage it.
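To make a figure such as "1.5 times more likely to be flagged" concrete, here is a minimal sketch of how such a disparity could be measured. It is not the method used in [3] or [4]: the function names, the group labels "A" and "B", and the toy records are all made up for illustration, and a real audit would typically compare error rates on posts known to be non-offensive rather than raw flag rates.

```python
from collections import defaultdict


def flag_rates(records):
    """Return the share of flagged items for each group.

    records: iterable of (group, flagged) pairs, where flagged is a bool.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, is_flagged in records:
        totals[group] += 1
        if is_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


def disparity_ratio(rates, group, reference):
    """How many times more often `group` is flagged than `reference`."""
    if rates[reference] == 0:
        raise ValueError("reference group has no flagged items")
    return rates[group] / rates[reference]


# Hypothetical toy data: 3 of 10 posts flagged for group A, 2 of 10 for group B.
records = (
    [("A", True)] * 3 + [("A", False)] * 7
    + [("B", True)] * 2 + [("B", False)] * 8
)

rates = flag_rates(records)
ratio = disparity_ratio(rates, "A", "B")
print(f"Flag rates: {rates}")
print(f"Group A is flagged about {ratio:.1f} times as often as group B")
```

A ratio close to 1 would suggest both groups are flagged at similar rates. A ratio well above 1 is a signal worth investigating, although on its own it does not prove that the model is biased.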


References

[1] "Facebook now has 2 billion monthly users… and responsibility," 27 06 2017. [Online]. Available: https://techcrunch.com/2017/06/27/facebook-2-billion-users/. [Accessed 25 08 2019].

[2] "How to Use Facebook Audience Insights for Better Targeting," 16 03 2018. [Online]. Available: https://sproutsocial.com/insights/facebook-audience-insights/. [Accessed 25 08 2019].

[3] M. Sap, D. Card, S. Gabriel, Y. Choi and N. A. Smith, "The Risk of Racial Bias in Hate Speech Detection," Proceedings of the 57th Conference of the Association for Computational Linguistics, pp. 1668-1678, 2019.

[4] T. Davidson, D. Bhattacharya and I. Weber, "Racial Bias in Hate Speech and Abusive Language Detection Datasets," arXiv preprint arXiv:1905.12516, 2019.